CPU vs GPU render farm: What is the difference and which is the best for your projects?

A render farm, whether CPU- or GPU-based, exists to make your rendering faster and easier. But what are CPU rendering and GPU rendering, and what is the difference between them? In this post, we will cover everything you need to know about CPU and GPU rendering.


CPU vs GPU

Systems are being pushed to do more than ever before, whether for 3D rendering, Big Data processing, AI/deep learning/machine learning, or any other demanding workload. The central processing unit (CPU) and the graphics processing unit (GPU) play quite different roles in this work.

What is a CPU?

A CPU, which is made up of billions of transistors and can have multiple processing cores, is often called the computer’s brain. The CPU is essential to every modern computing system because it executes the instructions and operations required by your computer and operating system. It also largely determines how quickly applications respond.

What is a GPU?

If the CPU is the brain, the GPU must be the soul. A GPU is a processor with many smaller, more specialized cores. When a processing task can be broken up and spread across many cores, those cores work together to deliver massive performance.

What is the difference between CPU and GPU?

| CPU | GPU |
|---|---|
| Central Processing Unit | Graphics Processing Unit |
| Several cores | Many cores |
| Low latency | High throughput |
| Good for serial processing | Good for parallel processing |
| Can do a handful of operations at once | Can do thousands of operations at once |

Although CPUs and GPUs are both important for processing computing tasks, they have different architectures and are built for different purposes. Architecturally, a CPU has only a few cores backed by large caches, and it manages a few software threads at a time. A GPU, on the other hand, has hundreds or thousands of cores that can handle thousands of threads at the same time.

In other words, a CPU is great at working through varied, general-purpose tasks quickly in serial (one at a time), while a GPU is great at applying the same kind of operation to huge amounts of data in parallel (many at a time).
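To make the serial-versus-parallel distinction concrete, here is a toy Python sketch (not how any real renderer is implemented). The hypothetical `shade_pixel` function stands in for a shader: each pixel depends only on its own coordinates, so the image can be computed one pixel at a time, CPU-style, or split into independent rows handed to a pool of workers. Python threads are used only to show how the work is split; they do not speed up CPU-bound Python code, whereas a GPU runs thousands of such independent tasks in hardware.

```python
from concurrent.futures import ThreadPoolExecutor


def shade_pixel(x: int, y: int) -> int:
    """Hypothetical toy 'shader': the result depends only on this pixel's coordinates."""
    return (x * 31 + y * 17) % 256


def render_serial(width: int, height: int) -> list[list[int]]:
    """Serial processing: compute every pixel one after another."""
    return [[shade_pixel(x, y) for x in range(width)] for y in range(height)]


def render_parallel(width: int, height: int, workers: int = 8) -> list[list[int]]:
    """Parallel decomposition: each row is an independent task for a worker pool."""

    def shade_row(y: int) -> list[int]:
        return [shade_pixel(x, y) for x in range(width)]

    with ThreadPoolExecutor(max_workers=workers) as pool:
        # pool.map returns results in input order, so rows land in the right place.
        return list(pool.map(shade_row, range(height)))


if __name__ == "__main__":
    image_a = render_serial(64, 32)
    image_b = render_parallel(64, 32)
    print(image_a == image_b)  # True: same image, different execution strategy
```

The key property is that no pixel needs another pixel's result, which is exactly the kind of work that maps well onto many GPU cores.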

CPU rendering vs GPU rendering

CPU rendering

CPU rendering is a technique that renders images solely using the CPU. There are several benefits to using CPU rendering.

Quality and Accuracy

Rendering takes time, and so does quality. Though it may take hours (or even days) to render an image, CPU rendering is more likely to deliver higher quality and clearer, noise-free images.

A CPU has far fewer cores than a GPU, but each core runs faster thanks to a higher clock speed. CPUs also now offer up to 64 cores, which is enough for excellent render performance. When several CPUs are linked together in a render farm environment, for example, they may be able to produce a more polished final result than GPU rendering. Because CPU rendering has fewer hard limits on scene size and complexity, the CPU is the usual standard for producing high-quality frames and images in films.

Pixar is known for the exceptional visual quality of its CPU-rendered films. Source: Brave – Pixar

RAM

A CPU has access to the onboard random-access memory (RAM), which lets users easily render scenes with huge amounts of data. For example, the Threadripper 3990X can support 512 GB of RAM, while graphics cards top out at 48 GB of VRAM. This massive amount of memory lets a CPU render extremely complex scenes with huge texture sets or millions of polygons.

Unlike a CPU, a GPU is limited by the amount of VRAM, not to mention the number and performance of the graphics cards installed. NVIDIA’s RTX 3090, the latest flagship card at the time of writing, has only 24 GB of VRAM. That is enough for most users, but it can become a bottleneck in highly complex scenes with many elements.
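A quick back-of-the-envelope calculation shows why VRAM fills up much sooner than system RAM. The sketch below assumes uncompressed 8-bit RGBA textures with no mipmaps, and it treats the capacities quoted above (24 GB for an RTX 3090, 512 GB of RAM for a Threadripper 3990X system) as GiB. Real renderers compress, tile, and stream textures, and geometry and frame buffers also need memory, so the numbers are rough illustrations only.

```python
GIB = 1024 ** 3  # bytes in one GiB


def texture_bytes(width: int, height: int, channels: int = 4, bytes_per_channel: int = 1) -> int:
    """Memory for one uncompressed texture (assumption: no mipmaps, no compression)."""
    return width * height * channels * bytes_per_channel


def max_textures(capacity_gib: float, per_texture: int) -> int:
    """How many such textures fit in the given memory, ignoring everything else in the scene."""
    return int(capacity_gib * GIB // per_texture)


if __name__ == "__main__":
    tex_8k = texture_bytes(8192, 8192)   # one 8K RGBA texture
    print(tex_8k / GIB)                  # 0.25 GiB per texture
    print(max_textures(24, tex_8k))      # 24 GB of VRAM: 96 textures
    print(max_textures(512, tex_8k))     # 512 GB of system RAM: 2048 textures
```

Under these assumptions, a scene needs fewer than a hundred 8K textures to exhaust a single RTX 3090, while a 512 GB workstation could hold around twenty times as many.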

Stability

Because CPUs have been used for rendering for a long time, most bugs have already been fixed, which naturally leads to better overall system stability. GPUs are more prone to problems: driver updates and incompatibilities with certain software versions or systems can cause poor or unstable GPU performance and, in the worst case, crashes.

Below are some works created with CPU-based render engines:

V-Ray Benchmark scene

Secret Garden – Mushroom by Thomas Vournazos. Source: Corona Gallery

GPU rendering

GPU rendering is the process of using one or multiple graphics cards to render 3D scenes. There are several benefits to using GPU rendering over CPU rendering.

Speed

GPU rendering is generally (a lot) faster than CPU rendering. A GPU has thousands of cores (the RTX 3090 has 10,496 CUDA cores), while a CPU has only up to 64. Although GPU cores run at relatively low clock speeds, their sheer number makes up for it and delivers strong rendering performance. In addition, a GPU is designed to run tasks in parallel, so it can render many elements of a scene simultaneously. Together, these give GPUs much faster render times than CPUs.

Flexibility & Scalability

Because GPUs execute rendering tasks in parallel, performance scales close to linearly as you add more GPUs. In other words, you can fairly easily add more GPUs to your computer to increase performance. However, how many GPUs you can use also depends on the 3D software: a few applications only use 1 or 2 GPUs, but most renderers support multiple GPUs. Some render farms offer 4/6/8/10-GPU packages so users can take advantage of powerful multi-GPU rendering.

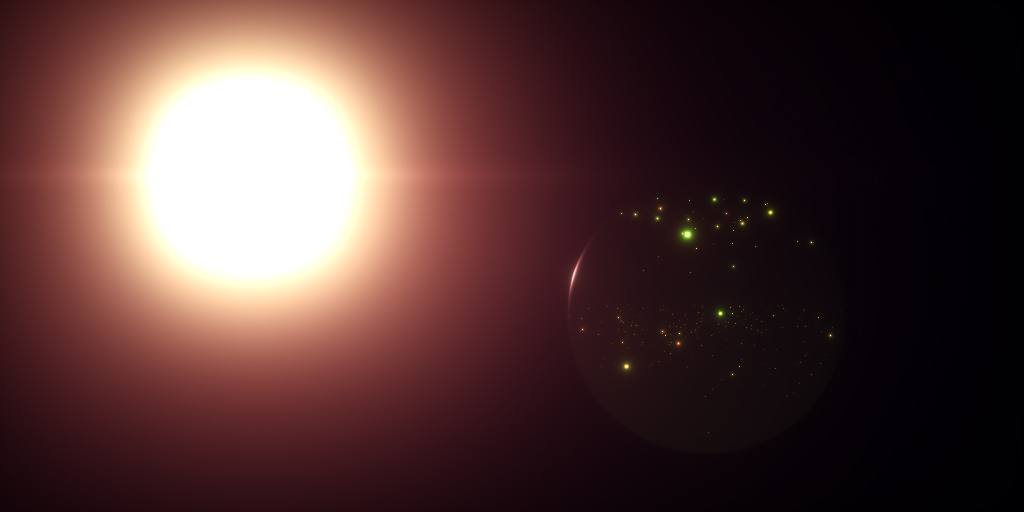
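The multi-GPU scaling described above can be estimated with a short sketch. With `serial_fraction=0` it gives ideal linear scaling (N GPUs, 1/N of the time); a nonzero `serial_fraction` applies Amdahl’s law, which models any part of the job, such as loading the scene, that does not split across cards. The 60-minute frame and the 10% serial share below are made-up illustration values, not measurements of any renderer.

```python
def render_time(single_gpu_minutes: float, gpus: int, serial_fraction: float = 0.0) -> float:
    """Estimated render time on `gpus` cards (Amdahl's law).

    serial_fraction is the share of the single-GPU time that cannot be split
    across cards; 0.0 means ideal linear scaling.
    """
    if gpus < 1:
        raise ValueError("need at least one GPU")
    if not 0.0 <= serial_fraction <= 1.0:
        raise ValueError("serial_fraction must be between 0 and 1")
    parallel_fraction = 1.0 - serial_fraction
    return single_gpu_minutes * (serial_fraction + parallel_fraction / gpus)


if __name__ == "__main__":
    for n in (1, 2, 4, 8):
        ideal = render_time(60, n)
        realistic = render_time(60, n, serial_fraction=0.1)
        print(f"{n} GPU(s): ideal {ideal:.1f} min, with 10% serial work {realistic:.1f} min")
```

With these assumed values, four GPUs cut a one-hour frame to 15 minutes under ideal scaling, or to about 19.5 minutes when 10% of the work cannot be parallelized.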

Below are some works created with GPU-based render engines:

Wormhole. Source: Otoy showcase

Rising Ruins by Hirokazu Yokohara. Source: Maxon Gallery

CPU vs GPU render farm: which to choose?

What are CPU and GPU render farms?

A Render Farm is a high-performance computer system, i.e. a cluster of individual computers linked together by a network connection. It is built to process or render computer-generated imagery (CGI), typically for animated films, CG commercials, VFX shots, or rendered still images. Each individual computer is usually called a Render Node.

A Render Farm consists of many Render Nodes and one Render Manager (Farm Manager). The Render Manager is software that manages rendering/processing jobs: it divides each job into smaller tasks and distributes them among all Render Nodes. The Render Nodes receive orders from the Render Manager through a Render Client, and they can communicate with it because they are all connected to the same LAN. Each Render Node has all the software (3D DCCs, renderers and plugins) needed to render/process the tasks it receives.
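The split-and-distribute step can be sketched in a few lines of Python. This is a simplified model, not the behavior of any particular render manager: the frame range is cut into fixed-size chunks (tasks), and the tasks are dealt out to hypothetical node names in round-robin order. Real managers usually assign tasks dynamically, giving the next task to whichever node becomes free first.

```python
from itertools import cycle


def split_frames(start: int, end: int, chunk_size: int) -> list[tuple[int, int]]:
    """Cut an inclusive frame range into tasks of at most `chunk_size` frames."""
    if chunk_size < 1:
        raise ValueError("chunk_size must be positive")
    tasks = []
    frame = start
    while frame <= end:
        last = min(frame + chunk_size - 1, end)
        tasks.append((frame, last))
        frame = last + 1
    return tasks


def assign_round_robin(tasks: list[tuple[int, int]], nodes: list[str]) -> dict[str, list[tuple[int, int]]]:
    """Deal tasks to render nodes in turn (a static schedule)."""
    if not nodes:
        raise ValueError("need at least one render node")
    schedule: dict[str, list[tuple[int, int]]] = {node: [] for node in nodes}
    for task, node in zip(tasks, cycle(nodes)):
        schedule[node].append(task)
    return schedule


if __name__ == "__main__":
    tasks = split_frames(1, 10, chunk_size=4)
    print(tasks)  # [(1, 4), (5, 8), (9, 10)]
    print(assign_round_robin(tasks, ["node-01", "node-02"]))
    # {'node-01': [(1, 4), (9, 10)], 'node-02': [(5, 8)]}
```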

So what is a CPU render farm, and what is a GPU render farm? Put simply, a CPU render farm is one that supports CPU rendering, and a GPU render farm is one that supports GPU rendering. Each has its own pros and cons, so choosing between them depends on a few factors: whether your render engine is CPU- or GPU-based, whether your scenes need lots of RAM/VRAM, whether your priority is quality or time, and so on.

From VFXRendering’s point of view, we prefer GPU render farms for three main reasons.

1. “Render-able” vs “Render properly”

When looking for a render farm, the first thing to do is check which software and versions it supports. Most render farms support standard, popular 3D software, render engines and plugins, so you don’t need to worry much if you use these packages. Problems only arise with special cases: software that supports only one GPU or real-time rendering, uncommon plugins, or versions that are brand new or very old. In these cases, it will take more time to find a render farm that supports your software. However, you can usually find a solution in one of the Top 5 Best Render Farms.

After finding a suitable render farm, the next step is to upload the project and collect the results once rendering is done. At this point, however, the render farm is simply “render-able”: you get results back after using the service.

The more important question is whether the results are what you expected. If they are, the render farm “renders properly”. If not, we have to check whether textures, materials, etc. are missing, leading to incorrect renders.

Incorrect renders can also be caused by the way CPU render farms work. As mentioned above, the Farm Manager takes the project, breaks it down into small parts (buckets/frames) and distributes them among all render nodes. What if the render nodes don’t have the same hardware? The renders of those buckets/frames may then differ, producing inconsistent still images or sequences. A mismatch in a few seconds of a sequence might go unnoticed, but in a still image the faulty bucket(s) stand out as distinct squares. Even a single faulty bucket or frame makes the final render defective and unusable.

On the other hand, GPU render farm can solve this problem. With multiple GPUs on a physical computer or with GPU virtualization, buckets/frames will be processed on identical configurations, ensuring correct final products.
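The bucket problem and the identical-configuration fix can be sketched as follows. `make_buckets` tiles a still image into square buckets, and `check_identical_nodes` refuses to dispatch them unless every node reports the same configuration. The `NodeConfig` fields (GPU model, driver version, renderer version) and the sample values are hypothetical; what a real farm compares depends on its render manager.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class NodeConfig:
    """Hypothetical per-node fingerprint of settings that can change a render's output."""
    gpu_model: str
    driver_version: str
    renderer_version: str


def make_buckets(width: int, height: int, size: int) -> list[tuple[int, int, int, int]]:
    """Tile an image into (x, y, w, h) buckets; buckets on the right/bottom edge may be smaller."""
    buckets = []
    for y in range(0, height, size):
        for x in range(0, width, size):
            buckets.append((x, y, min(size, width - x), min(size, height - y)))
    return buckets


def check_identical_nodes(nodes: dict[str, NodeConfig]) -> None:
    """Raise if the nodes do not all share one configuration."""
    distinct = set(nodes.values())
    if len(distinct) > 1:
        raise RuntimeError(f"mixed node configurations: {sorted(map(str, distinct))}")


if __name__ == "__main__":
    buckets = make_buckets(100, 50, 32)
    print(len(buckets), buckets[-1])  # 8 (96, 32, 4, 18)
    farm = {
        "node-01": NodeConfig("RTX 3090", "drv-A", "renderer-1.0"),
        "node-02": NodeConfig("RTX 3090", "drv-B", "renderer-1.0"),  # different driver
    }
    try:
        check_identical_nodes(farm)
    except RuntimeError as err:
        print("refusing to split the frame:", err)
```

Rejecting a mixed pool up front is cheaper than discovering a mismatched square in the finished still image.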

That is what we mean by “render-able” versus “render properly”.


2. Supported software

More and more 3D packages support GPU rendering: GPU-based renderers such as Redshift and OctaneRender, and real-time software such as Lumion, Twinmotion and Unreal Engine, to name a few. In addition, many applications that started with CPU rendering have gradually extended their support to GPUs, including V-Ray, Maxwell and Arnold, with Houdini expected to follow. Corona seems to be the only one that remains CPU-only, with no plans to switch to GPU in the foreseeable future. The list below shows several leading render engines in the industry and whether they are CPU- or GPU-based.

| CPU-based render engines | GPU-based render engines |
|---|---|
| Corona Renderer, V-Ray, Maxwell Render, Arnold | Redshift, OctaneRender, Blender ProRender, V-Ray, Maxwell Render, Arnold, Daz Studio Iray, KeyShot, Lumion, Twinmotion, Unreal Engine, Enscape… |

3. Moving from CPU to GPU render farm

With all of the exciting new advancements in GPU technology, as well as the growing support for GPU rendering among major 3D packages, it appears that relying on CPU rendering will soon be a thing of the past. The shift from CPU to GPU rendering is growing, and our community has recognized it.

First, as mentioned above, more and more 3D software now supports GPU rendering: new GPU-only DCCs are being developed and released, and many CPU-based applications are extending support to GPUs.

In recent years, GPU manufacturers have made huge advances with many outstanding technologies, substantially increasing GPU power (specifically, rendering performance) with each new generation. GPUs’ limitations compared to CPUs are also gradually being addressed. For example, NVLink can connect graphics cards so they share memory (VRAM), in effect increasing the available VRAM. Although the pooled VRAM is still much smaller than a CPU system’s typical memory, it is enough for most complex scenes. Besides NVLink, some GPU render engines (Redshift, OctaneRender) support out-of-core features that use the system’s memory (RAM) when the GPU runs out of VRAM.
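The memory options in this paragraph can be read as a fallback chain. The sketch below is a simplification under stated assumptions: it treats NVLink as pooling exactly two cards’ VRAM (in practice renderers may still duplicate some data on each card), treats out-of-core rendering as able to use VRAM plus system RAM, and otherwise falls back to CPU rendering. The function name, parameters and thresholds are hypothetical and do not correspond to any renderer’s API.

```python
def choose_memory_mode(
    scene_gb: float,
    vram_gb: float,
    nvlink_pair: bool = False,
    out_of_core: bool = False,
    system_ram_gb: float = 0.0,
) -> str:
    """Pick the first strategy whose (idealized) memory budget fits the scene."""
    if scene_gb <= vram_gb:
        return "in-core"          # whole scene fits on one card
    if nvlink_pair and scene_gb <= 2 * vram_gb:
        return "nvlink-pooled"    # assumes ideal pooling across two linked cards
    if out_of_core and scene_gb <= vram_gb + system_ram_gb:
        return "out-of-core"      # spill the overflow into system RAM (slower)
    return "cpu"                  # fall back to CPU rendering with its larger RAM


if __name__ == "__main__":
    # Illustrative scene sizes against a 24 GB RTX 3090 with 128 GB of system RAM.
    for size in (16, 40, 100):
        mode = choose_memory_mode(size, 24, nvlink_pair=True, out_of_core=True, system_ram_gb=128)
        print(size, mode)
```

The order of the checks mirrors the usual cost: in-core is fastest, pooled VRAM adds inter-card traffic, and out-of-core adds traffic over the slower PCIe bus.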

GPU rendering is rapidly catching up with CPU rendering; at this rate, its current drawbacks will likely be addressed in the near future.

Some thoughts

Both CPU rendering and GPU rendering have their own strengths and weaknesses. In summary, CPU rendering is for you if you have highly complex projects and need accuracy and stability, while GPU rendering is a great choice if you need speed. Consider your project’s requirements and the software you use carefully, and determine what will work best for you. Even so, we believe GPU is the future of rendering because of the technology’s potential, and we think many developers share this view.

These are our thoughts about CPU vs GPU render farms. What’s your experience with them? Let us know in the comments below. See you next time!
